
Convolutional Networks

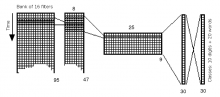

Time Delay Neural Networks

During the first years of my thesis, my main theme was the construction of speech recognition systems using neural networks. Kevin Lang and Geoff Hinton had published a tech report describing Time-Delay Neural Networks (TDNN). Alex Waibel and his team then demonstrated their efficiency at discriminating the Japanese phonemes /b/, /d/, /g/. But their approach was very costly: training took weeks on their Alliant super-computer.

Using Stochastic Gradient Descent, I proposed a new and computationally efficient variant of Time-Delay Neural Networks. I was able to run speaker-independent word recognition systems on a regular workstation instead of a super-computer. This was later extended to continuous speech recognition systems by combining a time-delay neural network and a Viterbi decoder. The combination was trained globally using a discriminant algorithm.
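As a rough illustration only, assuming the common textbook formulation in which a time-delay layer applies the same weights to every window of K consecutive input frames (a one-dimensional convolution over time), the sketch below shows such a layer and a plain gradient step on one training sequence. It is not the specific variant described above, and it omits the Viterbi decoder and the global discriminant training. The function names, the tanh nonlinearity, the squared loss, and the toy sizes are all hypothetical choices made for the example.

```python
import math
import random
from typing import List

Frames = List[List[float]]          # T frames, each a list of features
Weights = List[List[List[float]]]   # W[k][o][i]: delay k, output unit o, input feature i


def tdnn_forward(x: Frames, W: Weights, b: List[float]) -> Frames:
    """Hypothetical time-delay layer: the same weights are applied to every
    window of K = len(W) consecutive frames (a 'valid' 1-D convolution over
    time), followed by tanh. Returns len(x) - K + 1 output frames."""
    K = len(W)
    out = []
    for t in range(len(x) - K + 1):
        frame = []
        for o in range(len(b)):
            a = b[o]
            for k in range(K):
                for i, xi in enumerate(x[t + k]):
                    a += W[k][o][i] * xi
            frame.append(math.tanh(a))
        out.append(frame)
    return out


def squared_loss(y: Frames, target: Frames) -> float:
    # Assumed loss for the sketch: 0.5 * sum of squared errors over all frames.
    return 0.5 * sum((yo - to) ** 2
                     for yf, tf in zip(y, target)
                     for yo, to in zip(yf, tf))


def sgd_step(x: Frames, target: Frames, W: Weights, b: List[float], lr: float) -> None:
    """One gradient step on a single training sequence (updates W and b in place).
    Because the weights are shared across time, each weight's update sums
    contributions from every output frame."""
    y = tdnn_forward(x, W, b)  # outputs computed before any update
    for t, (yf, tf) in enumerate(zip(y, target)):
        for o, (yo, to) in enumerate(zip(yf, tf)):
            g = (yo - to) * (1.0 - yo * yo)  # dLoss/d(pre-activation) through tanh
            b[o] -= lr * g
            for k in range(len(W)):
                for i, xi in enumerate(x[t + k]):
                    W[k][o][i] -= lr * g * xi


# Toy usage: 8 frames of 3 features, 2 output units, delays 0..2 (K = 3).
random.seed(0)
T, d_in, d_out, K = 8, 3, 2, 3
x = [[random.uniform(-1, 1) for _ in range(d_in)] for _ in range(T)]
W = [[[random.uniform(-0.5, 0.5) for _ in range(d_in)] for _ in range(d_out)]
     for _ in range(K)]
b = [0.0] * d_out
target = [[0.5, -0.5] for _ in range(T - K + 1)]

loss_before = squared_loss(tdnn_forward(x, W, b), target)
for _ in range(100):
    sgd_step(x, target, W, b, lr=0.02)
loss_after = squared_loss(tdnn_forward(x, W, b), target)
print(f"loss: {loss_before:.4f} -> {loss_after:.4f}")
```

Weight sharing across time is what keeps the parameter count independent of the utterance length. In a stochastic setting, `sgd_step` would be called once per randomly drawn training sequence rather than repeatedly on the same one.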

Convolutional Networks for Computer Vision

This work led to a long collaboration with Yann LeCun on convolutional networks applied to a broad variety of problems in image recognition and signal processing, using increasingly sophisticated Structured Learning techniques. See also Yann's pages about convolutional networks.