
Neuristique s.a.

Neuristique was founded in 1988 by a dozen friends with big dreams.

The mission statement was a very long sentence that mentioned the application of artificial neural networks, the development of artificial brains, and the exploration of space. We were very young and inexperienced.

This adventure had positive developments. Neuristique was in business from 1988 to 2003. The company was closed "in the black" because its structure was becoming too heavy for its activities. The technology developed by Neuristique is still used by leading companies around the world. The human side was more painful. The contrast between our inexperience and our ambitions caused many disappointments. Friendships have been severed…

The SN Neural Network Simulator

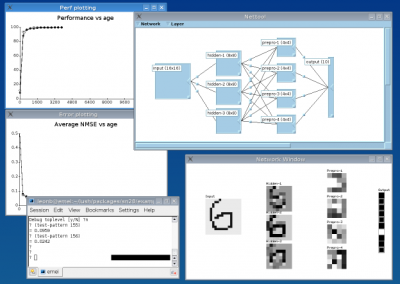

Neuristique's first product was the SN neural network simulation software. Yann LeCun and I initially wrote SN to support our research. We wanted a prototyping platform able to support ambitious applications of machine learning such as optical character recognition or speech recognition.

SN was basically a Lisp interpreter with highly optimized numerical routines for simulating and training neural networks. Lisp was chosen because it let us write a compact yet powerful interpreter without compromising the speed of the system. The very first version of SN already had a very fast stochastic gradient descent and convolutional neural networks. Thanks to many people, successive versions of SN acquired many features and libraries.

Leading R&D organizations around the world bought SN licenses. AT&T Bell Laboratories had its own internal version. SN programs were industrially deployed and are still running.

Around 1997, Neuristique released a version of the Lisp interpreter, named TL3, under the GPL. In 2001, AT&T Labs released most of its modifications under the GPL. Yann and I then gathered all these incompatible, freely available pieces and produced the latest descendant of SN: Lush. Almost twenty years after the initial version of SN, Lush was still going strong! Its lack of GPU support, however, makes it less relevant to current developments.

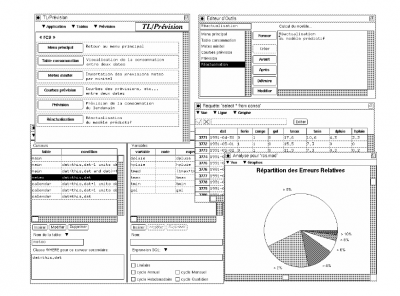

TL/Prevision and Early Data Mining

After writing my thesis and working with Vapnik at AT&T Bell Laboratories, I was convinced that Machine Learning was a scientific revolution in the making. The TL/Prevision project started when we understood one of the business aspects of that revolution.

A few years earlier at École Polytechnique, Claude Henry gave a wonderful class on microeconomic models of public economics. Consider for instance the problem of funding a toll bridge. Once the bridge is built, letting an extra car cross costs nearly nothing, so it seems reasonable to let through any car whose driver pays more than this small marginal cost. On the other hand, if everybody pays a toll equal to this small marginal cost, the cost of building the bridge will never be recovered. The best way to recover this initial cost would be to guess how much each driver is willing to pay for the benefit of crossing the bridge. Since this cannot be observed directly, it is common practice to design complicated price structures based on a priori discrimination: commercial vehicles pay more, peak hours are more expensive, etc.

In mathematical terms, market pricing is undermined by the nonconvex relation between the number of billable units and the total cost of the infrastructure. This applies to much more than building bridges: the nonconvexity appears whenever an activity benefits from economies of scale. Businesses as different as airlines, software development, telecommunications, and internet advertising have in common that markets cannot establish a simple relation between the asking price and the level of service. To deploy other pricing mechanisms, businesses must rely on a priori knowledge and on statistical data collected during their operation. This is in fact the fundamental driver of Data Mining, and a good target for Machine Learning.
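The toll-bridge argument can be made concrete with a stylized cost model. The symbols are our own illustrative assumptions, not taken from the original class: F is the construction cost, c the constant marginal cost of one crossing, N the number of crossings, and p a uniform toll.

```latex
C(N) =
\begin{cases}
0 & \text{if } N = 0,\\
F + cN & \text{if } N \ge 1,
\end{cases}
\qquad
\frac{C(N)}{N} = c + \frac{F}{N} \quad \text{(average cost decreases with } N\text{)}

\text{Marginal-cost pricing } (p = c):\quad pN = cN = C(N) - F
\quad\Longrightarrow\quad \text{deficit } F

\text{Nonconvexity: } C(1) = F + c \;>\; \tfrac{1}{2}\bigl(C(0) + C(2)\bigr) = \tfrac{F}{2} + c
\quad \text{whenever } F > 0
```

A single toll high enough to avoid the deficit, p ≥ c + F/N, turns away every driver whose willingness to pay lies between c and p, even though serving them would cost almost nothing. This is why discriminating price structures, and the data needed to design them, are attractive.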

The TL/Prevision project took shape when I realized this connection. The idea was to develop an automated machine learning package able to collect data from a business's SQL databases and perform various predictive tasks. The internal algorithms were based on Locally Regularized Learning Systems and optimized using Hoeffding races (in fact, TL/Prevision performs Bennett races). The software package was implemented by Neuristique using seed funds from ANVAR and was applied to problems such as call center optimization, water demand prediction, and traffic prediction.
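The racing idea can be illustrated with a minimal Python sketch of a plain Hoeffding race, in which candidate models are evaluated on test points one at a time and are discarded as soon as they are confidently worse than the current best. This is not TL/Prevision's code: the function names, the toy 0/1 losses, and the default `delta` are illustrative assumptions. The Bennett races that TL/Prevision performs would presumably replace the Hoeffding interval with a tighter, variance-aware interval derived from Bennett's inequality.

```python
import math
from typing import Any, Callable, Dict, Iterable, Tuple


def hoeffding_radius(n: int, delta: float, loss_range: float) -> float:
    """Half-width of a Hoeffding confidence interval for the mean of n
    independent losses bounded in [0, loss_range], at confidence 1 - delta."""
    return loss_range * math.sqrt(math.log(2.0 / delta) / (2.0 * n))


def hoeffding_race(
    candidates: Dict[str, Callable[[Any], float]],
    test_points: Iterable[Any],
    delta: float = 0.05,
    loss_range: float = 1.0,
) -> Tuple[Dict[str, float], int]:
    """Race hypothetical candidate models on a stream of test points.

    Each candidate maps a test point to a loss in [0, loss_range]. After every
    point, a candidate is eliminated when its lower confidence bound exceeds
    the best upper confidence bound among the survivors. Returns the survivors'
    mean losses and the number of test points consumed.

    Simplification: delta is not corrected for the number of candidates or
    for repeated testing, as a rigorous race would require.
    """
    alive = set(candidates)
    totals = {name: 0.0 for name in candidates}
    n = 0
    for point in test_points:
        n += 1
        # Only surviving candidates are evaluated: this is where racing saves work.
        for name in alive:
            totals[name] += candidates[name](point)
        means = {name: totals[name] / n for name in alive}
        # All survivors have seen the same n points, so they share one radius.
        eps = hoeffding_radius(n, delta, loss_range)
        best_upper = min(means.values()) + eps
        alive = {name for name in alive if means[name] - eps <= best_upper}
        if len(alive) == 1:
            break
    if n == 0:
        raise ValueError("the race needs at least one test point")
    return {name: totals[name] / n for name in alive}, n


if __name__ == "__main__":
    # Toy 0/1 losses on test points 0..999, with error rates of 10%, 50% and 100%.
    toy = {
        "good": lambda p: float(p % 10 == 0),
        "bad": lambda p: float(p % 2 == 0),
        "worst": lambda p: 1.0,
    }
    survivors, used = hoeffding_race(toy, range(1000))
    print(sorted(survivors), "after", used, "test points")
```

In this toy run only "good" survives, and the race stops well before the 1000 available points are used. Discarding hopeless candidates early is what makes it affordable to compare many model or hyperparameter settings on the same data.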

See some slides about the story of TL/Prevision.

Neuristique's Legacy

Despite our recognized technological leadership, the operation of Neuristique was dependent on the goodwill of its scattered founders. No decision could be taken without the consensus of its active founders. Every small disagreement took on existential proportions. That was exhausting. I left Neuristique in 2000. Xavier Driancourt ran it profitably until 2003, when we decided that its business activity no longer required the relatively costly legal structure of the company.

Despite these disappointments, the legacy of Neuristique is very positive:

- Numerous machine learning algorithms have been developed with SN, including convolutional neural networks for image recognition or signal processing, and early implementations of Support Vector Machines.

- An SN-based handwriting recognition system was used by many banks across the world to read checks. Some ATMs made by NCR use compiled SN code running on embedded DSP boards. A version of this check reader was embedded in large check reading engines sold by NCR and OrboGraph. Over more than ten years, this system has processed about 10% of all the checks written in the US.

- The first prototype of the DjVu image and document compression system was written using SN, including the first decoder and the first foreground/background segmentation algorithms.

- The TL/Prevision technology was licensed to KXEN Inc. and helped define their initial view of the business.

Acolytes

Here is a non-exhaustive list of Neuristique's direct collaborators:

- Jean Bourrely

- Michel Diricq

Neuristique technologies also benefited from the contributions of many more people:

- …