Table of Contents

The Tradeoffs of Large-scale Learning

This lecture was prepared for the NIPS 2007 tutorial. Variants of this lecture were given at IPAM Google, Microsoft Research, the International Conference of Nonconvex Programming (NCP'07), the Conference Francophone sur l'Apprentissage Automatique (CAP'08), the NIPS workshop Optimization for Machine Learning (OPT'08) Symposium on Learning and Data Sciences (SLDS'09), and the International Symposium of Mathematical Programming (ISMP'09)

Summary

Pervasive and networked computers have reduced the cost

of collecting and distributing large-scale datasets.

Since usual machine learning algorithms demand computing

times that grow faster than the volume of the data,

computing time is now the bottleneck

in real life applications.

Pervasive and networked computers have reduced the cost

of collecting and distributing large-scale datasets.

Since usual machine learning algorithms demand computing

times that grow faster than the volume of the data,

computing time is now the bottleneck

in real life applications.

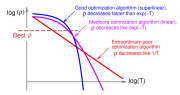

The first part of this presentation clarifies the relation between the statistical efficiency, the design of learning algorithms and their computational cost. The analysis shows distinct tradeoffs for the case of small-scale and large-scale learning problems. Small-scale learning problems are subject to the usual approximation–estimation tradeoff. Large-scale learning problems are subject to a qualitatively different tradeoff involving the computational complexity of the underlying optimization algorithms in non-trivial ways. For instance, a mediocre optimization algorithms, stochastic gradient descent, is shown to perform very well on large-scale learning problems. The second part makes a detailled exploration of stochastic gradient descent learning algorithms. Simple and complex experiments are discussed. The source code and datasets are available online. The third part discusses algorithms that learn with a single pass over the data.