Cold Case: The Lost MNIST Digits

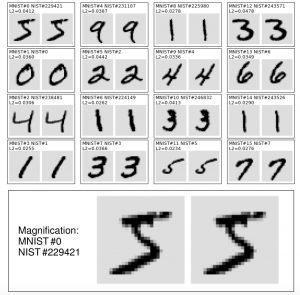

Abstract: Although the popular MNIST dataset [LeCun et al., 1994] is derived from the NIST database [Grother and Hanaoka, 1995], the precise processing steps for this derivation have been lost to time. We propose a reconstruction that is accurate enough to serve as a replacement for the MNIST dataset, with insignificant changes in accuracy. We trace each MNIST digit to its NIST source and its rich metadata such as writer identifier, partition identifier, etc. We also reconstruct the complete MNIST test set with 60,000 samples instead of the usual 10,000. Since the remaining 50,000 were never distributed, they can be used to investigate the impact of twenty-five years of MNIST experiments on the reported testing performances. Our limited results unambiguously confirm the trends observed by Recht et al. [2018, 2019]: although the misclassification rates are slightly off, classifier ordering and model selection remain broadly reliable. We attribute this phenomenon to the pairing benefits of comparing classifiers on the same digits.

qmnist-2019.djvu qmnist-2019.pdf qmnist-2019.ps.gz https://arxiv.org/abs/1907.02893

@incollection{yadav-bottou-2019,
  author = {Yadav, Chhavi and Bottou, L{\'{e}}on},
  title = {Cold Case: The Lost {MNIST} Digits},
  booktitle = {Advances in Neural Information Processing Systems 32},
  editor = {Wallach, H. and Larochelle, H. and Beygelzimer, A. and {d\textquotesingle Alch\'{e}-Buc}, F. and Fox, E. and Garnett, R.},
  pages = {13443--13452},
  year = {2019},
  publisher = {Curran Associates, Inc.},
  url = {http://leon.bottou.org/papers/yadav-bottou-2019},
}

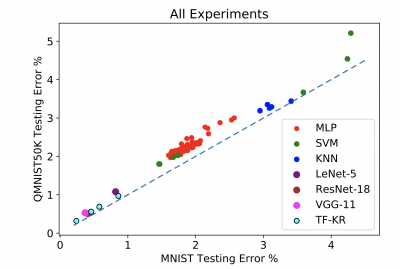

The final scatterplot

Each point in this scatterplot represents a machine learning system, with its specific hyper-parameters, trained on the MNIST training set. The main observation is that these systems perform only slightly worse on the new QMNIST50K testing examples than on the MNIST testing set.

Bernhard Schölkopf offered an interesting hypothesis about the main outlier, an SVM with a narrow RBF kernel, which is visible in the top right corner of the scatterplot. We know that pixel values in QMNIST training set images are essentially within ±2 units (out of 256) of pixel values for the corresponding MNIST training images. Because a very narrow RBF kernel responds strongly even to such small pixel differences, it gives the SVM the ability to capture distribution shifts that only affect pixel values in this small range (we know such shifts exist; see figure 4 in the paper).
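The sensitivity argument behind this hypothesis can be checked with a little arithmetic. The RBF kernel is k(x, x') = exp(-γ‖x − x'‖²), so a perturbation that barely changes ‖x − x'‖² barely matters when γ is small but can drive the kernel value far below 1 when γ is large. The sketch below is only an illustration under stated assumptions: the "digit" is synthetic random pixels rather than a real MNIST image, the ±2 differences are modelled as random integer shifts (the real MNIST/QMNIST differences are systematic, not random), pixels are rescaled to [0, 1], and the two γ values are hypothetical choices, not the hyper-parameters used in the paper.

```python
import math
import random

N_PIXELS = 28 * 28  # MNIST images are 28x28


def rbf_kernel(x, y, gamma):
    """Gaussian RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def perturb(image, max_shift, rng):
    """Add a random integer in [-max_shift, max_shift] to every pixel, clipped to 0..255.

    Assumption: random shifts stand in for the small MNIST/QMNIST pixel differences.
    """
    return [min(255, max(0, p + rng.randint(-max_shift, max_shift))) for p in image]


def self_similarity(gamma, max_shift=2, seed=0):
    """Kernel value between a synthetic image and its slightly perturbed copy.

    Pixel values are rescaled from 0..255 to [0, 1] before computing the kernel.
    """
    rng = random.Random(seed)
    image = [rng.randint(0, 255) for _ in range(N_PIXELS)]  # synthetic, not a real digit
    shifted = perturb(image, max_shift, rng)
    x = [p / 255.0 for p in image]
    y = [p / 255.0 for p in shifted]
    return rbf_kernel(x, y, gamma)


if __name__ == "__main__":
    # Hypothetical gamma values: a wide kernel and a very narrow one.
    for gamma in (0.01, 100.0):
        print(f"gamma={gamma:>6}: k(x, x') = {self_similarity(gamma):.4f}")
```

With the wide kernel the ±2 perturbation leaves the kernel value essentially at 1, while with the narrow kernel the same perturbation pushes it well below 0.5, which is the kind of sensitivity the hypothesis relies on.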

All these results essentially show that the “testing set rot” problem exists but is far less severe than feared. Although repeated use of the same testing set affects absolute performance numbers, it also delivers pairing advantages, because all classifiers are compared on the same digits, and these advantages help model selection in the long run. In practice, this suggests that a shifting data distribution is far more dangerous than overusing an adequately distributed testing set.
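The pairing advantage can be illustrated with a small simulation. When two classifiers are scored on the same digits, much of the sampling noise is shared and cancels in the difference of their error rates; when each is scored on its own independent test set, that noise adds up. The sketch below assumes hypothetical error rates of 1.2% and 1.0% and an extreme, simplistic error model in which every digit has a single "difficulty" u and a classifier errs whenever u falls below its error rate, so both classifiers fail on the same hardest digits. Real classifiers share only part of their errors, so the gain would be smaller, but it points in the same direction. This is not the analysis performed in the paper.

```python
import random
import statistics


def error_difference(err_a, err_b, n, paired, rng):
    """Estimate err_b - err_a from test set(s) of n digits.

    Each digit gets a difficulty u in [0, 1); a classifier errs when u < its error rate.
    paired=True: both classifiers are scored on the same digits (same u).
    paired=False: each classifier gets its own independent test set.
    """
    errors_a = errors_b = 0
    for _ in range(n):
        u = rng.random()
        v = u if paired else rng.random()
        errors_a += u < err_a  # bools count as 0 or 1
        errors_b += v < err_b
    return (errors_b - errors_a) / n


def spread(err_a, err_b, n=10_000, trials=100, paired=True, seed=0):
    """Standard deviation of the estimated difference over repeated test-set draws."""
    rng = random.Random(seed)
    diffs = [error_difference(err_a, err_b, n, paired, rng) for _ in range(trials)]
    return statistics.stdev(diffs)


if __name__ == "__main__":
    # Hypothetical error rates for two classifiers.
    for paired in (True, False):
        label = "same digits" if paired else "independent test sets"
        print(f"{label:>22}: std of estimated difference = {spread(0.012, 0.010, paired=paired):.5f}")
```

Under this model the paired estimate of the 0.2% gap fluctuates much less than the unpaired one, so the two classifiers are ordered correctly far more often even though each absolute error rate is just as noisy.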