Invariant Risk Minimization

Abstract:

Learning algorithms often capture spurious correlations present in the training data distribution instead of addressing the task of interest. Such spurious correlations occur because the data collection process is subject to uncontrolled confounding biases.

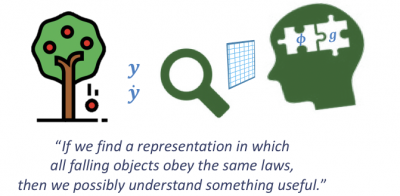

Suppose, however, that we have access to multiple datasets exemplifying the same concept but whose distributions exhibit different biases. Can we learn something that is common across all these distributions, while ignoring the spurious ways in which they differ? This can be achieved by projecting the data into a representation space that satisfies a causal invariance criterion. This idea differs in important ways from previous work on statistical robustness or adversarial objectives. Similar to recent work on invariant feature selection, this is about discovering the actual mechanism underlying the data instead of modeling its superficial statistics.
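As a rough illustration of what an invariance criterion across datasets can look like, the sketch below scores two linear predictors on a toy regression problem with two environments. Everything in it is an assumption made for illustration rather than something stated on this page: the data-generating process, the function names (`make_environment`, `irm_penalty`, `total_penalty`), and the choice of a gradient-based penalty (the squared derivative of each environment's squared-error risk with respect to a scalar multiplier fixed at 1.0), which is one commonly used surrogate for the invariance criterion.

```python
import numpy as np


def make_environment(sigma, n=10_000, seed=0):
    """Hypothetical toy environment: x1 causes y, while x2 is an effect of y."""
    rng = np.random.default_rng(seed)
    x1 = rng.normal(0.0, sigma, n)
    y = x1 + rng.normal(0.0, sigma, n)   # mechanism of interest: y depends on x1
    x2 = y + rng.normal(0.0, 1.0, n)     # spuriously predictive feature
    return np.stack([x1, x2], axis=1), y


def irm_penalty(weights, x, y):
    """Squared derivative of the risk w.r.t. a scalar multiplier w, at w = 1.

    For the risk mean((w * f - y)**2) with f = x @ weights, the derivative
    at w = 1 is mean(2 * (f - y) * f).
    """
    f = x @ weights
    grad = np.mean(2.0 * (f - y) * f)
    return grad ** 2


def total_penalty(weights, environments):
    """Sum the per-environment penalties (assumed aggregation)."""
    return sum(irm_penalty(weights, x, y) for x, y in environments)


# Two environments that differ only in their noise scale (assumed values).
environments = [make_environment(1.0, seed=1), make_environment(2.0, seed=2)]

invariant = np.array([1.0, 0.0])  # uses only the causal feature x1
spurious = np.array([0.0, 1.0])   # uses only the effect feature x2

print("penalty, invariant predictor:", total_penalty(invariant, environments))
print("penalty, spurious predictor: ", total_penalty(spurious, environments))
```

In this toy setup the predictor that relies on `x1` should receive a penalty close to zero in every environment, while the one that relies on `x2` should not, which is the sense in which the penalty separates the shared mechanism from the environment-specific correlation.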

@techreport{arjovsky-bottou-gulrajani-lopezpaz-2019,
  author = {Arjovsky, Martin and Bottou, L{\'{e}}on and Gulrajani, Ishaan and Lopez-Paz, David},
  title = {Invariant Risk Minimization},
  institution = {arXiv:1907.02893},
  year = {2019},
  url = {http://leon.bottou.org/papers/arjovsky-bottou-gulrajani-lopezpaz-2019},
}

Links

- Léon's ICLR 2019 talk

- Karen Hao's article in MIT Tech Review

Notes

Although making this idea work at scale remains a thorny problem (https://arxiv.org/abs/2102.10867), we believe that it is too early to give up (https://arxiv.org/abs/2203.15516).