ICML 2009

ICML 2009 took place in June.

Michael Littman and I were the program co-chairs.

Since we were expecting a lot of work, we tried to make it interesting by experimenting with a number of changes in the review process.

Read more for a little explanation and a few conclusions…

Motivations

It is generally accepted that the increasing volume of submissions tests the community's ability to perform high quality reviews. Without high quality reviews, the job of a program chair is hopeless. But what exactly makes a high quality review?

- Providing useful feedback to authors is valuable, but does not make the conference good.

- Correctly selecting the most solid papers is useful, but does not make the conference exciting.

- Correctly selecting the really important new ideas is very difficult. Even the most experienced reviewers can miss them.

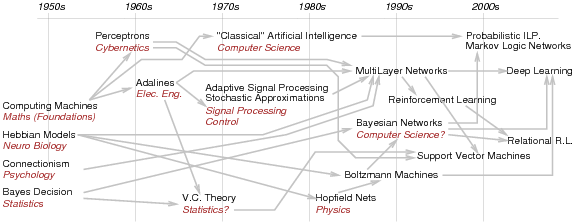

Spotting the important new ideas is difficult because new ideas appear when one takes a fresh point of view. Strong forces tend to restrict machine learning to the intersection of computer science and statistics. Yet machine learning grew from a great variety of fields. Who knows where the next breakthrough is going to come from?

In addition, machine learning permeates a number of applied fields. Challenging applications can trigger conceptual advances because they force us to push the boundaries of our conceptual models.

Conflicting remedies

- A track-based review process increases diversity. Unfortunately, it also restricts the choice of reviewers, makes reviewer load balancing harder, and can therefore decrease review quality.

- A single pool of reviewers improves load balancing. Since the reviewer assignment becomes difficult, many recent conferences let reviewers bid for the papers they would like to review. Algorithms then produce a preliminary reviewer assignment. Unfortunately, this process reduces diversity because it favors papers that adopt the dominant points of view.

- Tightening the acceptance rates leads to negative reviewing. What about papers with new ideas?

- Relaxing the acceptance rates leads to reviewer frustration. This is a risky proposition for a well established conference.

Two rounds of reviews

In general, when a submission does not receive sufficiently informative reviews, the program committee seeks additional reviews from trusted reviewers. In recent years, a large fraction of accepted NIPS and ICML submissions have received one to three additional reviews!

We decided to formalize this practice by setting up a two round review process. Each paper received at least two first round reviews. The area chairs were instructed to immediately reject papers receiving negative first round reviews whose criticism surely could not be reversed by additional reviews. About one quarter of the papers were rejected at this stage. The remaining papers received one to three second round reviews.

Author responses were sought after the first round. We would have liked to do the same after the second round, but the reviewing period was unfortunately too short. (The 2009 conference took place in June instead of July.)
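The early rejection rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Paper` class, the signed numeric scores, and the `reversible` flag (standing in for the area chair's judgment that additional reviews could change the outcome) are all hypothetical and do not describe the conference software.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    title: str
    first_round: list[int]   # hypothetical scores: negative means reject-leaning
    reversible: bool = True  # hypothetical: could more reviews reverse the criticism?


def triage(papers):
    """Split papers into early rejections and papers that go on to round two."""
    rejected, continuing = [], []
    for paper in papers:
        if len(paper.first_round) < 2:
            # Each paper is supposed to receive at least two first round reviews.
            raise ValueError(f"{paper.title!r} needs at least two first round reviews")
        all_negative = all(score < 0 for score in paper.first_round)
        # Reject early only when every review is negative AND the area chair
        # judges that additional reviews could not reverse the criticism.
        if all_negative and not paper.reversible:
            rejected.append(paper)
        else:
            continuing.append(paper)
    return rejected, continuing
```

Note that the decision hinges on the `reversible` judgment, which each area chair makes individually; nothing in this sketch enforces a common standard across area chairs.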

Conclusions

Area chairs applied inconsistent standards for early rejection.

Early rejection reduced the second round reviewing load. Area chairs were usually able to assign the second round reviewers they wanted for each paper.

Area chairs had to read the papers early in the process. The second round reviewer assignments were very well targeted and therefore led to informative reviews. No additional reviews were necessary to make the decisions.

Reverse bidding

The best way to obtain high quality reviews is to assign the right reviewers. Unfortunately, this assignment is very labor intensive. As explained above, algorithmic solutions are not without problems. Therefore we chose to implement an original idea.

Each area chair was first asked to recruit a dozen reviewers. In the paper submission form, authors were asked to name preferred area chairs (three first choices, three second choices). The objective was to increase diversity by letting authors name the area chairs whose reviewers are most likely to appreciate their work.

The area chairs were asked to name the first round reviewers from their own pool of reviewers. On the other hand, area chairs were encouraged to pick second round reviewers without restrictions. To ensure that no second round reviewer was overloaded, we simply set up a web page showing in real time which reviewers were still available. Such a simple approach worked because the first round reviews and the early rejection had considerably reduced the second round reviewing load.
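To make the bidding idea concrete, here is a minimal greedy sketch of matching papers to area chairs from author bids. It is not the procedure we followed: the function name `assign_area_chairs`, the per-chair `capacity` limit, and the least-loaded tie-breaking rule are all assumptions made for illustration.

```python
from collections import defaultdict


def assign_area_chairs(bids, capacity):
    """Greedy sketch (hypothetical, not the actual ICML 2009 procedure).

    bids:     paper -> (first_choices, second_choices), lists of area chair names
    capacity: area chair -> maximum number of papers (an assumed constraint)
    Returns (assignment, unassigned).
    """
    load = defaultdict(int)
    assignment, unassigned = {}, []

    def pick(choices):
        # Among the listed chairs that still have room, take the least loaded.
        open_chairs = [c for c in choices if load[c] < capacity.get(c, 0)]
        return min(open_chairs, key=lambda c: load[c]) if open_chairs else None

    for paper, (first, second) in bids.items():
        # First choices take precedence over second choices.
        chair = pick(first) or pick(second)
        if chair is None:
            unassigned.append(paper)  # no listed chair has room: handle by hand
        else:
            assignment[paper] = chair
            load[chair] += 1
    return assignment, unassigned
```

A greedy pass like this depends on the order in which papers are processed and ignores whether a listed area chair is actually a reasonable match for the paper, so manual adjustment would still be needed.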

Conclusions

All but two papers were assigned to one of the area chairs their authors listed.

Authors often placed poor bids because they confused the interests of an area chair with the competences of that area chair's pool of reviewers.

Assigning reasonable area chairs and simultaneously honoring the bids was very difficult.

First round reviewers often received papers that were far from their main interests (but rarely far from their competences).

"Food for Thought" session

After collecting all the reviews, we observed that sorting the borderline papers always punishes novel ideas. Two reviewers pointing out obvious minor flaws usually prevail over a single reviewer excited by a new idea. Therefore, in addition to the papers that were selected by the normal process, we asked the area chairs to point out additional papers by weighting novelty and potential impact more than correctable flaws. These papers were grouped in an additional session called “Food for Thought”.

The impact of the papers published in this session remains to be seen. However, we are proud to report that the session was packed. Since attendees and reviewers are the same people, one wonders why these papers received such criticism.

Software

Running such experiments was not easy because they do not always match the design of existing conference management software packages. Area chairs and reviewers sometimes had to deal with contrived user interfaces; reviewer discussions were hampered by an email bug.

Yet we must warmly thank the SoftConf development team. We could not have implemented our experimental process without their continued efforts. Our software advice for future program chairs is to make sure that their choice is backed by a responsive team.