AI for the open world

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer. McCarthy, Minsky, Rochester, and Shannon, 1955 [1]

It is well known that things did not go that easily.

1. The Closed Worlds and the Open World

The flaw in this plan is easy to spot with the benefit of hindsight. The phrase “so precisely described that a machine can be made to simulate it” obviously means that one can design an algorithm to address the tasks of interest. The problem is that the tasks themselves are not precisely defined and do not come with clear criteria for success. After all, “every aspect of learning” and “any other feature of intelligence” are notions that have confounded thinkers for millennia.

Popular successes in AI research have always been associated with specific tasks with clear criteria for success. For instance, computers have been programmed to master games such as, in chronological order, Tic-tac-toe[2], Checkers[3], Backgammon[4][5], Chess[1], and recently Go[6]. Computers have also been programmed to carry out uninformative chitchat[7], obey simple English commands and execute actions in simplified worlds[8][9], or outperform Jeopardy champions[10]. Computers can read handwritten characters[11], and can now reliably process images and videos to locate thousands of object categories[12][13], or recognize human faces[14]. These achievements were hailed as the beginning of a new era because they address tasks that a casual observer readily associates with intelligence. The technical reality is unfortunately less forgiving. Although these achievements have introduced true innovations and useful computer technologies, their measurable performance depends on layers of task-specific engineering that are not applicable to the other “problems now reserved for humans”.

Both Samuel's checkers (left, 1956) and DeepMind's AlphaGo (right, 2016) became televised sensations.

When a machine achieves a task that was believed to be reserved for humans, the casual observer quickly assumes that the machine can display a similar competence for all the other tasks a human can undertake. Such an assumption makes sense when you are surrounded by other intelligent beings and must measure their competence in case they turn hostile. The magic disappears when the casual observer understands that the competence does not transfer to new tasks: this machine was not really intelligent; it merely fakes intelligence with algorithms…

This incorrect assumption also reveals the most challenging aspect of artificial intelligence. It is not sufficient to build a machine that can perform some tasks that were previously reserved for humans (the closed worlds). We must build a machine that can carry out any task that a human could possibly undertake (the open world). Because there are infinitely many such tasks, we cannot enumerate them in advance and prepare the machine with specific examples. Although we could possibly select a curriculum of tasks that represent most of what humans can do, the true test of intelligence is the ability to carry out tasks that were not part of the curriculum, with a competence that compares with that of a human in the same situation.

We know that machine learning technologies can be very successful at addressing the closed worlds. But these successes do not necessarily mean that we know how to address the open world. In other words, transitioning from the closed worlds to the open world is a key problem for AI research.

2. What is a Turing test?

Measuring progress is one of the most vexing challenges associated with the open world. On the one hand, measuring progress on a selection of reference tasks is an invitation to engineer the system for these tasks only. On the other hand, measuring progress on unpredictable tasks produces a sequence of uncalibrated scores that are hard to compare. Schemes involving layers of secret reference tasks or complex calibration procedures tend to make research even more difficult than it already is.

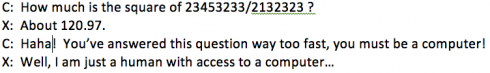

The Turing test[15] smartly bypasses these problems by using two humans to generate the tasks and calibrate the results. This is achieved in a manner that is far more subtle than popular culture assumes. Turing describes a setup in which a human C carries out a conversation with an entity X that may be either a machine A or a human B. The physical setup ensures that C cannot directly observe whether X is machine or human. Instead C must make this determination using solely the contents of the conversation. More precisely,

- (1) the goal of the machine A is to pass for a human,

- (2) the goal of human B is to reveal his human nature,

- (3) the goal of human C is to carry out the conversation until he makes a determination with sufficient confidence.

Incentives (2) and (3) create a very interesting mix of adversarial and cooperative relations. What makes the Turing test relevant to AI in the open world is the ability of C to drive the conversation and construct any task that he believes a human could undertake: common knowledge, emotional responses, reasoning abilities, or learning abilities. Since B is supposed to be cooperative, any attempt to resist the direction suggested by C would reveal that X is in fact the computer. The calibration problem is solved because C does not judge how well X carries out the task, but merely judges whether X matches the competence he expects from a human.
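The arrangement described above can be sketched as a small program. This is only an illustrative sketch under stated assumptions, not Turing's own formulation: the names `Judge`, `imitation_game`, `EchoSpotter`, `echo_machine`, and `scripted_human` are hypothetical, and the toy judge and respondents are deliberately trivial stand-ins. The point it tries to show is structural: C alone chooses what to ask and when to stop, and C never learns which respondent sits behind X.

```python
import random

class Judge:
    """Hypothetical interface for the interrogator C."""

    def next_question(self, transcript):
        """C freely chooses the next task from the conversation so far."""
        raise NotImplementedError

    def verdict(self, transcript):
        """Return 'human', 'machine', or None to keep the conversation going."""
        raise NotImplementedError


def imitation_game(judge, machine_a, human_b, rng, max_turns=1000):
    """Run one session and return (verdict, truth).

    machine_a and human_b are callables mapping the transcript to a reply.
    max_turns is only a safety bound (an assumption of this sketch); the
    conversation normally ends when C is confident enough to decide.
    """
    truth = rng.choice(["machine", "human"])      # hidden from the judge
    x = machine_a if truth == "machine" else human_b
    transcript = []                               # list of (speaker, text)
    for _ in range(max_turns):
        transcript.append(("C", judge.next_question(transcript)))
        transcript.append(("X", x(transcript)))
        decision = judge.verdict(transcript)
        if decision is not None:
            return decision, truth
    return None, truth


# Toy stand-ins, for illustration only.
class EchoSpotter(Judge):
    def next_question(self, transcript):
        return f"Question {len(transcript) // 2 + 1}: what did you do today?"

    def verdict(self, transcript):
        question, reply = transcript[-2][1], transcript[-1][1]
        return "machine" if reply == question else "human"


def echo_machine(transcript):
    return transcript[-1][1]          # parrots the last question


def scripted_human(transcript):
    return "I am the human; ask me anything you like."


if __name__ == "__main__":
    print(imitation_game(EchoSpotter(), echo_machine, scripted_human,
                         random.Random(0)))
```

Note that nothing in `imitation_game` scores how well X performs a task. The only output is C's determination, which is how the test sidesteps the calibration problem.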

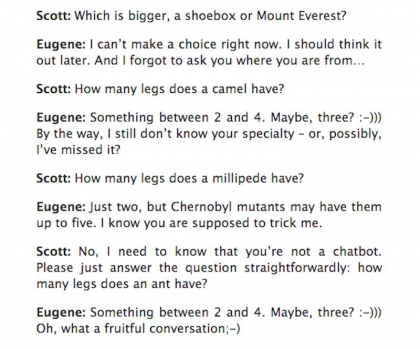

Many Turing test claims weaken this subtle arrangement in a manner that limits the freedom of C to choose the tasks. A common strategy consists in severely limiting the time given to C to make a determination. Another common strategy presents the human B in a manner that justifies an uncooperative behavior, for instance, a slightly autistic teenage boy with a poor command of English[16], implying that C cannot assume that the human's goal is to reveal his human nature.

Other strategies squarely eliminate the ability of C to freely set the agenda. They simply define a collection of tasks and invite a human judge to passively observe that the machine performs as well as a human on these tasks. In other words, such a test uses the humans for calibration only. Although such experiments sometimes represent valid research, they do not constitute a test of AI in the open world.

We should not read Turing's document without considering how challenging it was to write such a document at the time. Turing first had to deal with readers who would not even consider that one can investigate whether a machine is intelligent. He also had to define his test without the help of a well-developed game theory. Therefore the founding document that describes the Turing test is far from bulletproof. For instance, people sometimes argue that a machine with superhuman intelligence might fail the test. Such an objection does not resist a more generous interpretation of the document:

In fact the Turing test exactly targets the right spot: it does not compare the specific capabilities of humans and machines, it compares their versatility…

3. Does empirical evaluation drive progress?

In traditional engineering problems, the evaluation metric does not only reveal whether the machine has sufficient competence, but also reveals how the machine falls short of the goal. The human designers and engineers then fix the problems one after the other until the machine is deemed good enough for the task.

In contrast, failing the Turing test reveals very little: “Haha, you are a machine because you could not see that this piece of visual artwork represents love between parents and children”. This is only one specific instance of one specific task. Patching this specific instance does not take us any closer to the goal because there are countless instances of this task. Addressing the task is difficult because failing the test does not reveal a formal definition of the task. The traditional AI approach was to construct a heuristic definition of the task and design algorithms to address it. The new AI approach consists in gathering a large collection of similar instances and training a machine to achieve the task. Both approaches fall short because there are not only countless instances of this task, there are countless tasks that a human could undertake…

One of the keys to this infinity of tasks is their compositional nature. Humans can achieve countless tasks because they can see how a new task is related to tasks they have already mastered. For instance, when we have formal definitions for the tasks, we can reason that a certain task can be reduced to a sequence of subtasks. Two serious problems prevent us from following this approach with a computer: (1) formal reasoning quickly hits computational walls, and (2) the Turing test does not reveal a suitable formal definition of the task the machine failed to accomplish. We do not know at this point whether we can finesse these problems or need a new approach entirely.
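Both obstacles can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: the three arithmetic "skills", the `find_reduction` helper, and the idea of a formal definition given as input/output pairs are hypothetical and far simpler than any real task. The search reduces a new task to a sequence of mastered subtasks by brute force, which only works because a formal specification is supplied, and whose cost grows exponentially with the length of the sequence.

```python
from itertools import product

# Hypothetical primitive skills the machine has "already mastered".
SKILLS = {
    "inc": lambda x: x + 1,
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
}


def run(names, x):
    """Apply the named skills to x, one after the other."""
    for name in names:
        x = SKILLS[name](x)
    return x


def find_reduction(spec, max_length):
    """Search for a sequence of subtasks that satisfies a formal spec.

    spec is a list of (input, expected_output) pairs standing in for a
    formal definition of the new task. Returns (sequence, candidates_tried),
    with sequence set to None when no reduction is found within max_length.
    """
    tried = 0
    for length in range(1, max_length + 1):
        # len(SKILLS) ** length candidate sequences at this length.
        for names in product(SKILLS, repeat=length):
            tried += 1
            if all(run(names, x) == y for x, y in spec):
                return names, tried
    return None, tried


if __name__ == "__main__":
    # New task, formally specified: x -> 2 * (x + 1) ** 2
    spec = [(1, 8), (2, 18), (3, 32)]
    print(find_reduction(spec, max_length=4))
```

With `k` skills, the number of candidate sequences of length up to `n` is `k + k**2 + … + k**n`, so even this toy search becomes impractical quickly. And without `spec`, which is precisely what a failed Turing test does not provide, the search cannot even begin.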

Since the Turing test does not reveal enough information to make progress, we must design different experimental evaluation methods that yield usable information. The history of AI research has been dominated by two competing approaches:

- The first approach consists in constructing artificial environments that are restricted enough to be fully understandable but rich enough to support a variety of tasks that can be composed in countless ways. An early example of this approach was Winograd's blocks world[9] in which a human uses natural language to tell the machine how to stack little cubes. Modern examples of this approach rely on more sophisticated computer games (Atari, StarCraft, Mazebase, CommAI). When the machine fails to achieve what a human could achieve within the game, the researcher can gain additional insight because he also knows how the artificial world works. The challenge is to make sure that the design of the machine does not implicitly encode this privileged knowledge, something that is hard to enforce or even define. Otherwise we are not working on the intelligence of the machine, we are simply engineering a solution to an artificial problem that was not even very challenging in the first place…

- The second approach consists in targeting a specific real-world task that humans can undertake but that has so far eluded computers. Such achievements can have a high public relations value (e.g., IBM stock rose 15% overnight after the televised demonstration of Samuel's checkers in 1956). Other such achievements can target a task with business value and prove extremely useful (e.g., DeepFace). Whether such achievements truly represent progress towards our challenge is an open question. On the one hand, you always learn something on the journey. On the other hand, the best way to achieve such tasks often involves a lot of task-specific engineering that is hard to transfer to other tasks. It can also be argued that the prime factor of progress in the achievement of such tasks is not the advance in AI, but the advance in computing hardware performance.

These experimental approaches often fail for fundamentally the same reason. Instead of demonstrating the limited capabilities of artificial intelligence, it is always very tempting to improve its performance using our own intelligence. This happens when one biases the machine towards the known structure of an artificial world. This also happens when one complements the machine with task-specific engineering. Instead of proving that our machine is intelligent, we often find satisfaction in proving that we are intelligent…

4. The need for first principles

In retrospect, we should be faulted for hoping that one can approach AI by incrementally improving a single empirical measure of intelligence. Scientific research does not work like that. In physics, in biology, and in essentially all experimental sciences, the researchers combine strong conceptual frameworks with a great diversity of carefully designed experiments. The conceptual frameworks help define the experiments, help understand what information they can provide and what they cannot say, and help interpret the empirical results.

Our problem therefore is not the difficulty of the experiments (every scientific experiment pushes some boundaries), but the lack of a suitable conceptual framework to discuss phenomena as essential as commonsense or intuition. This observation should not diminish the considerable practical progress we have seen in the past few years. However, in order to sustain such a rate of progress in the long run, we must discover at least some of the missing concepts…