Table of Contents

Fast Kernel Learning Algorithms

This is a subtopic of Large-Scale Learning.

Kernel machines are often associated with dual techniques that implement very large parameter spaces at the expense of scalability in the number of examples. However, kernel machines can scale nicely by cleverly approximating the conventional optimization problem solved during learning. These ideas are not specific to kernel machines and are being actively explored in the context of other learning algorithms. Starting with relatively well understood kernel machines has provided a sounder way to assess their impact on the statistical efficiency of learning algorithms.

LaSVM and related algorithms

Earlier work has established that second-order stochastic gradient learning algorithm need only a single pass over the training data to achieve a generalization performance comparable to that of an exact optimization algorithm. Alas second-order stochastic gradient algorithm become quite memory intensive when then dimension of the problem increases.

Reprocessing training examples is a simple workaround. Whenever we process a new training example, we can also return on the past training examples in order to approach the performance of a full optimization on all the training examples, including the new one. Of course storing all these examples could be extremely inefficient. We found that we can store a small subset of the past training examples that summarizes them well. Furthermore, after processing a new training example, we found sufficient to only reprocess a couple stored examples to recover, on average, the desired level of performance.

In the case of a Support Vector Machines, the obvious choice consists of summarizing the examples using the Support Vectors. We designed a sequence of online SVM algorithms, the Huller, LaSVM, and LaRank. These algorithms reach generalization peformances comparable to the batch SVMs after a single pass over the training examples.

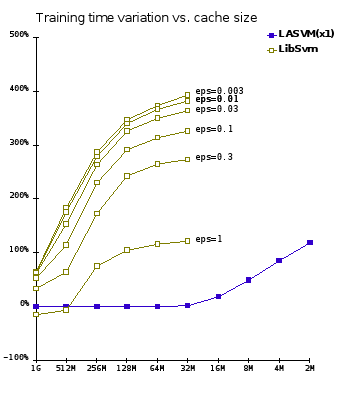

Most training examples will only be seen once by the learning algorithm. This property considerably reduces the memory requirements of kernel algorithms. As a consequence these algorithms scale to datasets containing millions of examples.

Large-scale active learning

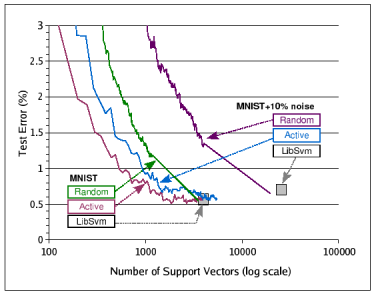

Since we can summarize the past training examples using a well chosen subset, we can also consider selecting each new training examples. Instead of performing a sequential pass over the training set, we can actively pick informative training examples, that is, examples located in the vicinity of the decision boundary. This active learning strategy solves two problems that usually plague support vector machines.

- Support vector machines trained on noisy datasets typically have many support vectors. Active learning support vector machines remain sparse on noisy datsets and even enjoy a slight performance advantage because of the increased sparsity.

- Support vector machines trained on datasets with imbalanced classes have degraded performance. Active learning support vector machines maintain their performance because all datasets are balanced when one selects examples near the decision boundary.

Collaborators

Nips Workshop: Large-Scale Kernel Machines

There is a tension between the challenges of large-scale datasets and the relative slowness of the standard kernel machines algorithms. To make them fast, one needs to bring new ideas. This was the starting idea of the NIPS 2005 workshop on Large-Scale Kernel Machines, organized by Olivier Chapelle, Dennis DeCoste, Jason Weston, and myself.

We were not disappointed. The Large-Scale Kernel Machines book progresses from well-understood techniques to increasingly controversial topics. For instance, the last chapter considers problems that animals perform effortlessly, such as perception and control, and argue that local kernel representations are inefficient for these problems. Meanwhile, this argument might not apply to other relevant tasks, such as mining transaction logs. Are these classes of problems different enough to justify distinct scientific approaches?