Wasserstein Generative Adversarial Networks

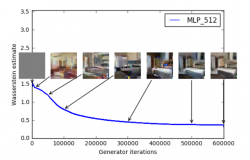

Abstract: We introduce a new algorithm named WGAN, an alternative to traditional GAN training. In this new model, we show that we can improve the stability of learning, get rid of problems like mode collapse, and provide meaningful learning curves useful for debugging and hyperparameter searches. Furthermore, we show that the corresponding optimization problem is sound, and provide extensive theoretical work highlighting the deep connections to different distances between distributions.
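The "meaningful learning curves" claim rests on how the Wasserstein-1 (earth mover's) distance behaves compared with the Jensen-Shannon divergence when two distributions barely overlap. The snippet below is a minimal pure-Python sketch. It does not come from the authors' code, and the helper names `wasserstein1_1d` and `js_divergence_discrete` are hypothetical. It assumes 1-D empirical distributions with equal sample sizes and uniform weights. Under that assumption the optimal transport plan pairs sorted samples, so W1 reduces to the mean absolute difference of the order statistics. The setup follows the spirit of the paper's parallel-lines example: a "real" point mass at 0 and a "generated" point mass at theta.

```python
import math


def wasserstein1_1d(xs, ys):
    """W1 between two equal-size 1-D empirical distributions (uniform weights).

    In one dimension the optimal coupling matches sorted samples, so W1 is the
    mean absolute difference between corresponding order statistics.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("expected two non-empty samples of equal size")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def js_divergence_discrete(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete
    distributions given as dicts mapping outcome -> probability."""
    support = set(p) | set(q)
    # Mixture distribution m = (p + q) / 2 over the joint support.
    m = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in support}

    def kl_to_m(a):
        # KL(a || m); terms with zero probability contribute nothing.
        return sum(v * math.log(v / m[k]) for k, v in a.items() if v > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


if __name__ == "__main__":
    real = [0.0] * 8  # "real" data: point mass at 0
    for theta in (2.0, 1.0, 0.5, 0.0):
        fake = [theta] * 8  # "generated" data: point mass at theta
        w1 = wasserstein1_1d(real, fake)
        js = js_divergence_discrete({0.0: 1.0}, {theta: 1.0})
        print(f"theta={theta:4.1f}  W1={w1:.3f}  JS={js:.3f}")
```

As theta moves toward 0, W1 shrinks in proportion to |theta|. JS stays at log 2 for every nonzero theta and drops to 0 only when the two distributions coincide. A loss based on W1 therefore keeps signaling progress, while a JS-based one gives no useful signal until the supports overlap.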

Martin Arjovsky, Soumith Chintala and Léon Bottou: Wasserstein Generative Adversarial Networks, Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, Australia, 7-9 August 2017.

@inproceedings{arjovsky-chintala-bottou-2017,

author = {Arjovsky, Martin and Chintala, Soumith and Bottou, L\'{e}on},

title = {Wasserstein Generative Adversarial Networks},

year = {2017},

booktitle = {Proceedings of the 34th International Conference on Machine Learning, {ICML} 2017, Sydney, Australia, 7-9 August, 2017},

url = {http://leon.bottou.org/papers/arjovsky-chintala-bottou-2017},

}

Code is available at https://github.com/martinarjovsky/WassersteinGAN.